We live in an AI-driven world, and the landscape has shifted again with Meta’s recent release of its AI language model, LLaMA. Positioned as a smaller, more efficient alternative to existing large language models, LLaMA gives researchers a new foundation for language processing. In this blog post, we’ll go over the most important details about LLaMA and what it means for the language-processing industry. Now, let’s dive in!

Quick Summary

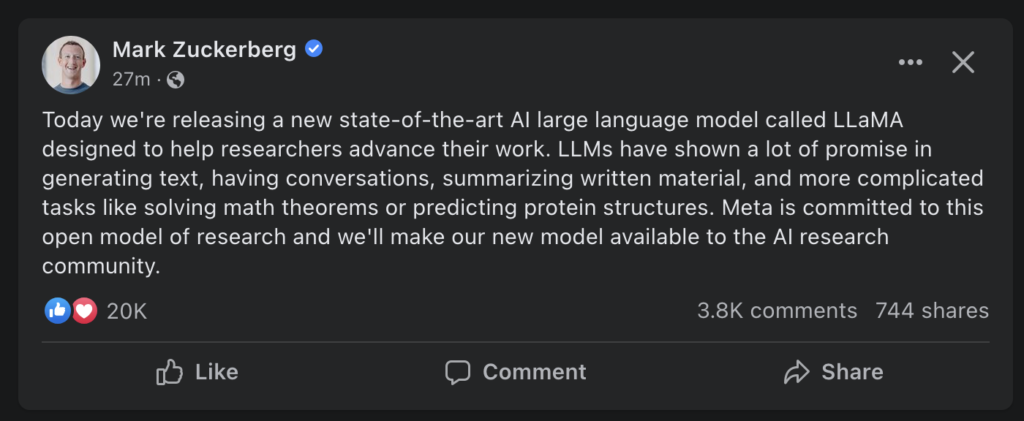

LLaMA (Large Language Model Meta AI) is a large language model developed by Meta’s AI research group. Like other large language models, it can generate text, hold conversations, and summarize written material. Meta also points to more complicated tasks, such as solving math theorems or predicting protein structures, as areas where models of this kind have shown promise.

Introduction to Meta’s AI Language Model LLaMA

Meta has recently unveiled its newest artificial intelligence (AI) language model, LLaMA, a family of foundation models trained to analyze and generate natural-language text. It is not the first model of its kind, but it is notable for delivering strong results at comparatively small model sizes. Supporters of such models tout their potential to eventually rival human capabilities on some language tasks, letting machines handle text without task-specific programming. That prospect has stimulated debate among those interested in AI, with some expressing excitement at the potential of the technology while others worry about its implications.

The most impressive aspect of LLaMA is how simple but powerful it is. LLaMA is built on the transformer architecture rather than a recurrent neural network (RNN), and it is trained on a very large text corpus to predict the next token in a sequence. The more text the model sees during training, the better its predictions tend to become in similar situations; it does not keep learning from new data afterwards unless it is fine-tuned. Models like this can also turn natural-language requests into code, although that is a learned behavior rather than a separate algorithmic system. All of this makes it a powerful tool for organizations that need to sift through natural dialogue quickly.

Like other transformer models, LLaMA can use context beyond the literal meaning of individual words. By capturing patterns, relationships between words, and subtle nuances in syntactic structure, it is better equipped than earlier rule-based programs for comprehension and completion tasks. With this capability, researchers have become more optimistic about using AI-powered models in medical diagnostics and other important applications such as surveillance or robotics. On the other hand, concerns remain over whether these models should be trusted with sensitive material such as personal healthcare data or national security information.

This tension between potential benefits and risks, which sits at the center of the debate around LLaMA, shows how significant this tool may be for society. Before we can properly evaluate those dangers and implications, we need a working understanding of artificial intelligence itself. With this in mind, let us now explore what artificial intelligence is.

What Is Artificial Intelligence (AI)?

Artificial intelligence (AI) is an area of computer science that focuses on the development of machines with intelligent behavior. AI systems attempt to replicate human thought processes, decision making and cognitive functions. They are able to learn from data, recognize patterns, optimize their output, make decisions and solve complex problems. AI has the potential to revolutionize many industries, including healthcare, automotive and finance.

The use of artificial intelligence has sparked a heated debate in society: should we use it or not? Supporters point out that AI can extract insight from mundane tasks, such as producing forecast analysis for companies. It can also automate complex processes and tackle large problems that have been difficult or impossible for humans to solve on their own. Opponents counter that AI takes jobs away from people, deepens inequality because it struggles to interpret text and images from different cultures accurately, and produces opaque models that could prove disastrous if left unchecked.

Despite these debates and potential risks, a number of researchers believe AI augmenting the workforce will be beneficial for everyone involved. It can allow businesses to work more efficiently and cost-effectively, leaving them free to focus on creative endeavours. As a result, this could lead to increased innovation in areas where creativity was limited before.

Now that you understand the concept of artificial intelligence, let us move to the next section which explains what Natural Language Processing (NLP) is and how LLaMA integrates it into its language model offering.

Key Points to Know

Artificial Intelligence (AI) is an area of computer science that focuses on creating machines with intelligent behavior. It has the potential to revolutionize many industries, but it has sparked debate over job losses and inequality. Despite this, a number of researchers believe it can benefit businesses by making them more efficient and freeing them to focus on creative endeavors. Natural Language Processing (NLP) is a subfield of AI, and LLaMA is an NLP model.

What is Natural Language Processing?

Natural language processing (NLP) is a branch of artificial intelligence that focuses on the interactions between computers and human languages. It helps machines to understand, interpret, and generate human language in order to facilitate communication. NLP uses various machine learning techniques to enable machines to classify words into relevant categories, as well as comprehend syntax, grammar, and semantics when analyzing text.

NLP has been a contentious area in recent years: some argue it is an essential technology for future progress in areas such as healthcare and education; others contend that machines may never fully master human language, or that developing NLP programs raises ethical concerns.

On one hand, proponents of natural language processing believe that it is a necessary evolution of machine learning algorithms that, combined with big data analytics, will lead to breakthroughs in areas such as education and healthcare. They point out that it lets machines learn from vast amounts of data far faster than humans can process it, improving accuracy and utility in assessment tasks. On the other hand, skeptics fear that NLP could take meaningful tasks away from humans while introducing ethical risks such as biased decision-making caused by unfair algorithm design or misinterpreted data sets.

Those who are ambivalent agree with neither side completely but tend towards keeping natural language processing on the outskirts of mainstream utilization until more precise regulations have been developed that ensure fairness and ethical application.

Regardless, natural language processing continues to develop whether opponents like it or not, and Meta has now unveiled its AI language model LLaMA. In the next section, we’ll give an overview of what this new advancement brings to the world of NLP.

Overview of LLaMA

LLaMA is the latest advancement in natural language processing from Meta and promises to change the way we process language. An artificial intelligence (AI) model, LLaMA was designed as a deep learning language model and has received widespread attention since its release. This overview covers its features, capabilities, and potential applications.

At its core, LLaMA is an AI system that generates human-readable text. It takes a text prompt and extends it one token at a time, choosing each next token based on everything that came before. The model learned to do this by training on a large corpus of publicly available text, which is what allows it to produce realistic output. Early users have praised its ability to replicate human writing patterns and generate fluent, grammatical sentences.
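To make the idea of learning from text and then generating text concrete, here is a minimal sketch. It is a toy word-level bigram model, not LLaMA’s actual method: the names `train_bigram` and `generate` and the tiny corpus are assumptions made up for illustration, and a real model replaces the lookup table with a neural network over subword tokens.

```python
import random
from collections import defaultdict

def train_bigram(text):
    """Record, for every word, the words observed to follow it."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, start, max_words=8, seed=0):
    """Extend `start` one word at a time by sampling an observed follower."""
    rng = random.Random(seed)  # fixed seed keeps the toy run repeatable
    output = [start]
    for _ in range(max_words - 1):
        candidates = followers.get(output[-1])
        if not candidates:  # no known continuation: stop early
            break
        output.append(rng.choice(candidates))
    return " ".join(output)

# Hypothetical training text, far smaller than any real corpus.
corpus = "the model reads text and the model writes text"
model = train_bigram(corpus)
print(generate(model, "the"))
```

Every word pair in the output was seen during training, which is why even this tiny model produces locally plausible text. The loop structure (predict, append, repeat) is the part that carries over to large models.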

There are debates around the ethical implications of creating an AI model that can mimic humans’ language patterns. On one hand, some argue that this could be advantageous for society as it could lead to better communication between people living and working with machines. On the other hand, this technology may also take away jobs from people who rely heavily on using writing or verbal communication skills to carry out their tasks. Despite these debates, LLaMA signals another milestone in the development of natural language processing systems, making it an exciting prospect for businesses interested in incorporating such technology into their products or services.

As we move on to the next section, let’s discuss what features make LLaMA stand out from other language models and why businesses should consider integrating it into their operations.

What are the features of LLaMA?

The recently released AI language model LLaMA has generated a buzz of excitement in the tech world. Developed by Meta, LLaMA stands out as a cutting-edge tool for natural language processing. Here are some of its noteworthy features:

Firstly, LLaMA uses a state-of-the-art transformer architecture to generate text that reads naturally and is grammatically correct. Through prompting or fine-tuning, users can steer the output toward different languages, styles, and tones. In addition, it can serve as the language component of other applications, such as chatbots.

Secondly, LLaMA delivers strong performance for its size. Because its models are smaller than many competitors, they can process large amounts of text with less computing power, which lets users complete tasks faster without giving up much accuracy.

Thirdly, LLaMA is distributed as model weights and inference code rather than as a hosted platform. Meta released it under a noncommercial research license, with access granted to researchers on request, so services such as continuous improvement, corpus management, or training on custom data sets are not part of the release and have to be built by the teams that adopt it.

Lastly, because it is a foundation model, it can be fine-tuned and wrapped in an API so that it slots into automated text generation workflows. That integration work is left to the user rather than built in.

Overall, LLaMA from Meta stands out from other language models because of this combination of features. With its strong natural language processing ability, efficient performance, and flexibility to be integrated across different platforms, it opens up many opportunities for text generation applications.

Having discussed the features of LLaMA in detail, let us now look into how this revolutionary language model works in the next section.

How LLaMA Works

LLaMA is a natural language processing (NLP) model created by Meta that can be adapted to understand and respond to everyday human conversation. It is a deep learning model built on the transformer architecture with learned token embeddings. It was trained on a large corpus of publicly available text, which lets it interpret written language and generate plausible responses.

At the core of LLaMA’s technology lies its ability to model words, phrases, and conversations. To achieve this, the model does not rely on convolutional neural networks or reinforcement learning; it uses self-attention layers trained with a next-token prediction objective. It also uses embeddings to map tokens into a vector space, which it draws on when interpreting the context of a conversation. Through these technologies, the model can detect context and intent more accurately than traditional NLP models, leading to more accurate responses.
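The idea of mapping language items into a vector space can be illustrated with a short sketch. The three-dimensional vectors below are hand-picked assumptions for illustration only, as are the names `EMBEDDINGS`, `cosine_similarity`, and `most_similar`; a real model learns its embeddings during training and uses far more dimensions.

```python
import math

# Hypothetical 3-dimensional embeddings, hand-picked for illustration.
EMBEDDINGS = {
    "cat": [0.9, 0.1, 0.0],
    "kitten": [0.8, 0.2, 0.1],
    "car": [0.1, 0.9, 0.3],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def most_similar(word):
    """Return the other vocabulary word whose vector is closest to `word`."""
    target = EMBEDDINGS[word]
    others = (w for w in EMBEDDINGS if w != word)
    return max(others, key=lambda w: cosine_similarity(target, EMBEDDINGS[w]))

print(most_similar("cat"))  # kitten
```

Words with related meanings end up pointing in similar directions, so geometric closeness can stand in for semantic closeness when the model interprets context.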

The combination of these technologies allows LLaMA to pick up some of the nuances of different languages, including slang terms and dialects that appear in its training data. This is seen as a major advantage for businesses wishing to build conversational AI for customers around the world who do not necessarily speak English as their native language. On the flip side, there are concerns that training a model like LLaMA on certain kinds of data might lead to biased results. This could produce an AI system that perpetuates those biases or that cannot correctly interpret the speech of people who are poorly represented in the data set it was trained on.

All in all, though, much research is being done on how LLaMA works, with the aim of improving its performance while minimizing the risks associated with its use. With this in mind, the next section looks at some of the benefits of using this AI language model in business settings.

Benefits of LLaMA

LLaMA, the new artificial intelligence (AI) language model from Meta, offers a range of benefits to its users. First, LLaMA is a capable language model that can produce natural-sounding text in multiple languages. This makes it an attractive choice for use in automated chat bots, conversational AI systems, text generation applications, and other natural language processing tasks.

In addition, LLaMA is designed to be accessible to both developers and data scientists: because it is pre-trained, they do not need to train a model from scratch to get up and running. Finally, although LLaMA is not open source in the strict sense (Meta released the weights to researchers under a noncommercial license), that release lets it benefit from the collective experience of the global AI research community working to study and improve it.

The downsides of using LLaMA need to be considered as well. For example, LLaMA requires considerable computing power due to its large size; this may make it difficult or expensive for some users to deploy. Additionally, like any widely distributed software, it can be misused or expose vulnerabilities once it is in many hands.

Overall, LLaMA offers many potential benefits for developers and data scientists looking for a powerful language model with low barriers to adoption. With these benefits in mind, let’s turn our attention next to how LLaMA compares to other language models on the market.

- LLaMA is a generative language model released in four sizes (7B, 13B, 33B, and 65B parameters) and trained on between 1.0 and 1.4 trillion tokens.

- LLaMA utilizes contextual information to produce context-aware representations and reduce reliance on large amounts of task-specific labeled data.

- According to Meta, the 13B-parameter LLaMA model outperforms GPT-3 (175B parameters) on most benchmarks despite being more than 10 times smaller.

How Does LLaMA Compare to Other Language Models?

LLaMA (Large Language Model Meta AI) is a recently released AI language model that has taken the world of natural language processing (NLP) by storm. The model was developed by Meta and has been presented as a powerful tool for natural language understanding. But how does LLaMA compare to other existing language models?

One of the main advantages of LLaMA is that it achieves strong accuracy on natural language understanding tasks relative to its size. Meta reports that the 13B model outperforms the much larger GPT-3 on most of the benchmarks it tested, which include common-sense reasoning and question answering. LLaMA can produce contextual responses from a prompt alone, with few or no examples, and its subword tokenizer removes the need for preprocessing steps such as stemming, which makes it suitable for complex NLP tasks.

However, LLaMA also faces some drawbacks compared to other language models. One issue is that although it has fewer parameters than some of its competitors, it was trained on more data, and fine-tuning or running it can still require substantial time and resources depending on the project scope and objectives. Additionally, having fewer parameters than the largest models on the market could limit its performance on certain complex tasks.

Overall, LLaMA offers a powerful set of capabilities for natural language understanding that stand out in comparison to other existing AI language models. However, the choice between one model or another ultimately depends on the specific application requirements at hand and potential drawbacks must be weighed before deciding which model to use.

Now that we have discussed how LLaMA compares to other language models, let’s move on and take a closer look at the Final Review of LLaMA in the next section.

Final Review of LLaMA

Despite its innovative approach to natural language processing, the LLaMA AI language model has come under criticism over how its performance claims should be interpreted. The LLaMA team reports that the model matches or outperforms state-of-the-art models on standard benchmarks, but at release there was little independent evidence to confirm these claims. Because access to the weights was limited to approved researchers, it was also difficult for objective third parties to assess the validity of LLaMA’s results.

LLaMA has also been criticized for its high computational requirements. In order to achieve maximum performance, it requires large amounts of memory and computing power – more power than is available on many consumer grade devices. This can lead to high operating costs over time and can significantly limit the number of users who can access the technology.
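A back-of-the-envelope calculation shows why memory is a concern. The sketch below multiplies parameter count by bytes per parameter; the helper name `weight_memory_gb` is hypothetical, the sizes are the rounded published model sizes, and the 2 bytes per parameter is an assumption corresponding to 16-bit weights. Activations, the attention cache, and framework overhead are ignored, so real usage is higher.

```python
# Rounded parameter counts of the four published LLaMA sizes, in billions.
MODEL_SIZES_BILLIONS = {"7B": 7, "13B": 13, "33B": 33, "65B": 65}

def weight_memory_gb(params_billions, bytes_per_param=2):
    """Estimate memory for the weights alone, in gigabytes (10**9 bytes).

    bytes_per_param=2 assumes 16-bit weights; use 4 for 32-bit weights.
    """
    return params_billions * 1e9 * bytes_per_param / 1e9

for name, size in MODEL_SIZES_BILLIONS.items():
    print(f"{name}: ~{weight_memory_gb(size):.0f} GB in 16-bit precision")
```

Under these assumptions the smallest model needs about 14 GB for its weights and the largest about 130 GB, which is more than the memory available on most consumer-grade devices.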

Despite these critiques, LLaMA has still been praised for its potential applications. By putting a capable model in the hands of researchers, it makes natural language processing more accessible than before. Additionally, its ability to process thousands of sentences in a relatively short time frame suggests a potential advance in conversation-level understanding, something that current models struggle with. But until more detailed, independently verified performance benchmarks are published, we won’t be able to draw definitive conclusions about how well it truly performs compared to existing models.

Frequently Asked Questions

What is the purpose of the AI large language model called LLaMA?

The purpose of the large AI language model called LLaMA is to give researchers a strong foundation model for natural language processing and to help machines better understand human-generated text. In simple terms, LLaMA can take in sentences or phrases, mainly in English and a number of other languages, and produce meaningful outputs that reflect the context of the input. It works by training a deep learning network on massive amounts of text and then applying what it has learned to new inputs. In doing so, the model picks up how sentence structure, grammar, and semantics work within the languages it was trained on. This can lead to greater accuracy in tasks such as machine translation, information retrieval, and automated summarization. Ultimately, this helps improve natural language processing models overall, which in turn helps us interact better with technology.

How does the AI large language model called LLaMA compare to other language models?

The AI large language model called LLaMA is considered one of the most efficient language models for its size. According to Meta, it produces accurate results and often outperforms larger language models on standard benchmarks. Like its competitors, it uses deep learning techniques that allow it to capture the underlying semantic structures of languages, and it models the context in which words are used, which helps its interpretation of sentences. LLaMA is pre-trained on unlabeled text, where the training signal comes from the text itself, and it can then be fine-tuned on labeled data with supervised learning. This lets the model learn from both labeled and unlabeled data rather than relying on supervised learning alone. In short, LLaMA is an advanced natural language processing tool that delivers strong accuracy relative to its size thanks to deep learning, contextual understanding, and large-scale pre-training.
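Learning from unlabeled text can be made concrete with a small sketch: the text itself supplies the answers, because each word serves as the target for the words before it. The function name `next_token_pairs`, the `context_size` parameter, and the whitespace splitting are simplifying assumptions for illustration; real models use subword tokenizers and much longer contexts.

```python
def next_token_pairs(text, context_size=3):
    """Turn raw text into (context, target) examples with no manual labels.

    Each target is simply the word that follows its context in the text.
    Whitespace splitting stands in for a real subword tokenizer.
    """
    tokens = text.split()
    pairs = []
    for i in range(1, len(tokens)):
        context = tokens[max(0, i - context_size):i]
        pairs.append((context, tokens[i]))
    return pairs

for context, target in next_token_pairs("language models learn from raw text"):
    print(context, "->", target)
```

A sentence of six words yields five training examples without anyone annotating it, which is why this style of training scales to very large text collections.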

What are the main features of the AI large language model called LLaMA?

LLaMA is an artificial intelligence (AI) based large language model developed by Meta. Its capabilities can help speed up development for natural language processing (NLP) tasks.

The main features of LLaMA include:

1. Pre-trained AI model architecture – LLaMA comes as a set of pre-trained models in several sizes, so users can get started with NLP tasks without training a model from scratch.

2. Autocomplete Capabilities – LLaMA is a text-completion model at heart: given a prompt, it predicts likely continuations using contextual information. Semantic search is not built in, but it can be layered on top.

3. Automated Speech Recognition (ASR) – LLaMA works on text only and has no ASR capabilities of its own. To work with audio, users need a separate speech recognition system to transcribe recordings into text first.

4. Syntax Analysis and Semantic Role Labeling – LLaMA has no dedicated syntax analysis or semantic role labeling module, but it learns relationships between words and their roles within a sentence implicitly, which helps it produce syntactically correct output.

5. Natural Language Understanding (NLU) – LLaMA’s language understanding can help applications grasp the meaning behind user text. It does not ship with connectors for conversation platforms such as Dialogflow, so any such integration has to be built separately.

6. Text-To-Speech (TTS) Conversion – LLaMA does not include TTS functionality. Its text output can be passed to a separate speech synthesis system when spoken responses are needed.

These features, combined with sufficient computing power, make LLaMA a promising foundation for a wide range of natural language applications such as dialogue systems, customer service bots, automated assistants and more.